Methods

How do we calculate the street O2O index?

-

1. Observations

"As one of many Yelp users, searching for food online is part of my exploratory life." However, do stores that are popular among Yelp reviewers really contribute to the liveliness of physical space?

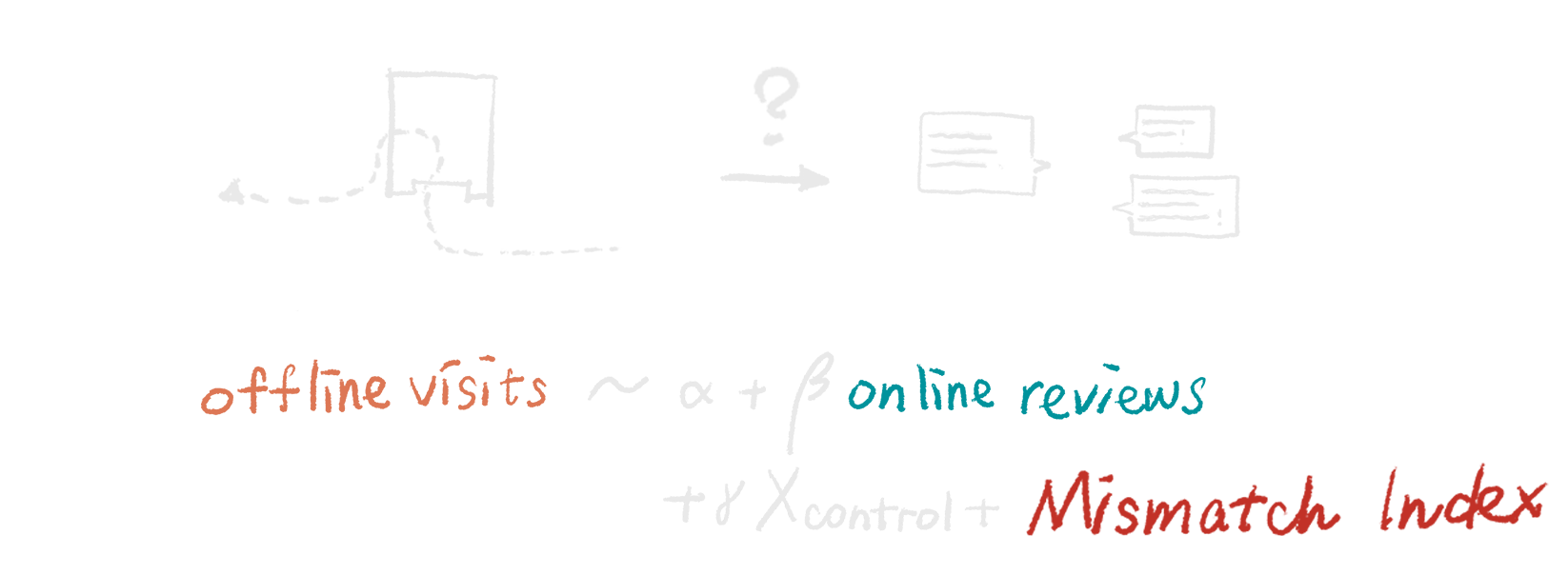

Inspired by this question, we downloaded review data for 15,000+ stores in Manhattan from Yelp and aggregated the reviews by physical street segment. This lets us compare the aggregated online popularity with the number of actual visits along these streets. Here is what we found.

2. Model Construction

To test how much online reviews actually contribute to physical activity, we include other variables in the model.

* Physical Characteristics: Does Beautiful Space Matter?

* Business Diversity and Transit: Is Jane Jacobs still right?

* Local Business: How are local businesses doing?

* X: We control for other variables.

The graph below shows our preliminary results. The height of the bar indicates the significance of each variable (how precisely it is estimated), and the length of the bar shows the effect size of each variable (how much it contributes to the model). For example, a 1-unit increase in a street's average star rating is associated with an increase of around 35% in offline visitor volume. But once the average star rating goes beyond 3.4, it becomes associated with a decrease in offline visitor volume (see the quadratic term).
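The turning point in the star rating follows from the quadratic term. As a sketch, suppose the star-rating part of the model is written with a linear and a squared term; the symbols $s$, $\beta_1$ and $\beta_2$ are our notation for illustration and do not appear in the original model output:

$$
\begin{aligned}
f(s) &= \beta_1 s + \beta_2 s^2, \qquad \beta_1 > 0,\ \beta_2 < 0 \\
\frac{\partial f}{\partial s} &= \beta_1 + 2\beta_2 s \\
\frac{\partial f}{\partial s} = 0 \;&\Longrightarrow\; s^{*} = -\frac{\beta_1}{2\beta_2}
\end{aligned}
$$

Below $s^{*}$ a higher average star rating goes with more offline visits, and above it with fewer. The reported threshold of about 3.4 stars corresponds to $s^{*}$ under this reading.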

3. Estimate the Mismatch

This model achieves an R-squared of only around 40%, sigh... That means a lot of the variance cannot be explained even after we control for the neighborhood variables! We therefore use this model's residuals to define an O2O mismatch index: the residual from the OLS model that regresses total street-level visits on online reviews (the number of reviews and the stars) and other built environment factors.
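A minimal sketch of how such a residual-based index could be computed, assuming NumPy and a plain OLS fit with an intercept. The function name `ols_residuals`, the variable names, and all the numbers below are synthetic and hypothetical; they are not the study's data, specification, or results.

```python
import numpy as np

def ols_residuals(X, y):
    """Fit y = a + X b by ordinary least squares.

    Returns (residuals, r_squared). The residual is observed minus predicted.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), X])  # prepend an intercept column
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = float(np.sum(residuals ** 2))
    ss_tot = float(np.sum((y - y.mean()) ** 2))
    return residuals, 1.0 - ss_res / ss_tot

# Synthetic street segments (illustration only; coefficients are arbitrary).
rng = np.random.default_rng(0)
n = 200
reviews = rng.poisson(50, n)      # number of reviews per segment
stars = rng.uniform(1, 5, n)      # average stars per segment
beauty = rng.normal(size=n)       # normalized beauty score
visits = (2.0 + 0.01 * reviews + 0.5 * stars - 0.07 * stars ** 2
          + 0.3 * beauty + rng.normal(size=n))

X = np.column_stack([reviews, stars, stars ** 2, beauty])
mismatch_index, r2 = ols_residuals(X, visits)
```

Because the residual is observed minus predicted, a positive value marks a segment with more offline visits than its online reviews and built environment would predict, and a negative value marks the opposite.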

-

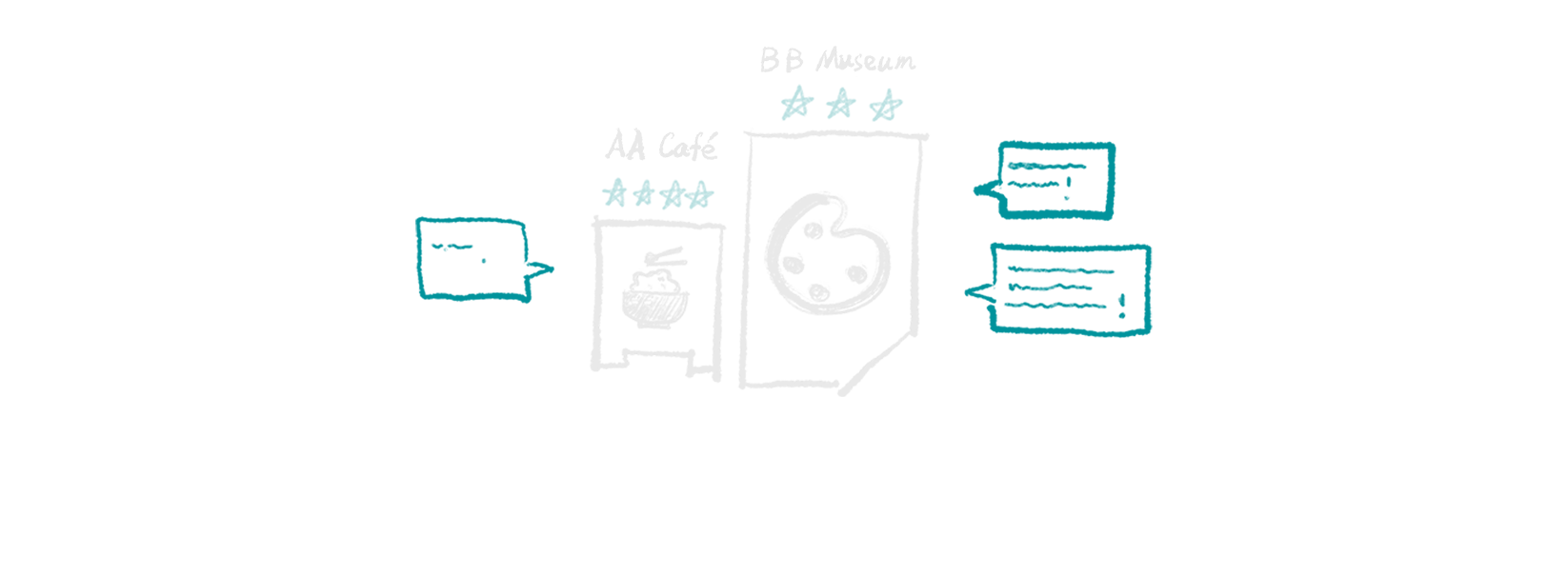

4. Data

We use the SafeGraph pattern data* via the SafeGraph COVID-19 Data Consortium to estimate offline popularity. See the acknowledgement for further detail on the mobility pattern data. We aggregate the POI-level visit pattern data from October to December 2019 for each POI. Our review data are downloaded from Yelp. We use the number of reviews, the review stars, and the stores claimed closed for all listings in the Manhattan district to estimate online popularity. To make the two datasets comparable, we select only businesses coded as retail, restaurants, hotels, and arts & recreation according to the official NAICS codes (44, 45, 71, 72).
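The sector filter can be sketched as a check on the first two digits of each business's NAICS code. The record layout and the field name `naics_code` are assumptions for illustration, not the actual SafeGraph or Yelp schema.

```python
# Two-digit NAICS sectors named in the text: retail (44, 45),
# arts & recreation (71), accommodation and food services (72).
TARGET_SECTORS = {"44", "45", "71", "72"}

def in_target_sector(naics_code):
    """Return True if the code's two-digit sector prefix is one we keep."""
    return str(naics_code)[:2] in TARGET_SECTORS

# Hypothetical POI records.
pois = [
    {"name": "cafe", "naics_code": 722515},
    {"name": "bank", "naics_code": 522110},
    {"name": "museum", "naics_code": 712110},
    {"name": "bookstore", "naics_code": "451211"},
]

selected = [p["name"] for p in pois if in_target_sector(p["naics_code"])]
```

Converting the code to a string first lets the same check handle codes stored as integers or as text.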

-

5. Estimating Street-level Online Popularity and Offline Popularity

All POI data (both online and offline) are aggregated to the nearest street segment using an R-tree search algorithm.
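To illustrate the assignment step, here is a brute-force sketch that finds the nearest street segment by point-to-segment distance. It is a stand-in, not the R-tree search itself: an R-tree would first narrow the candidate segments by bounding box and then apply the same distance test. The sketch assumes planar (projected) coordinates and straight two-point segments; the segment ids are hypothetical.

```python
import math

def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the line segment a-b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:  # degenerate segment: a and b coincide
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, clamped to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def nearest_segment(p, segments):
    """Return the id of the segment closest to p (linear scan)."""
    return min(segments, key=lambda sid: point_segment_distance(p, *segments[sid]))

# Two hypothetical parallel street segments.
segments = {
    "seg_a": ((0.0, 0.0), (10.0, 0.0)),
    "seg_b": ((0.0, 5.0), (10.0, 5.0)),
}

poi_near_a = nearest_segment((3.0, 1.0), segments)
poi_near_b = nearest_segment((3.0, 4.0), segments)
```

The linear scan checks every segment for every POI, which is fine for a toy example; a spatial index matters once there are thousands of segments and POIs.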

Using the Yelp review data, we aggregate the number of reviews and the average stars of the individual businesses at the street-segment level.
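Once every business carries a segment id, the street-level aggregation might look like the sketch below. It assumes the number of reviews is summed and the stars are averaged across businesses without weighting; the text does not specify the weighting, so that choice and the tuple layout are assumptions.

```python
from collections import defaultdict

def aggregate_by_segment(businesses):
    """Aggregate (segment_id, review_count, stars) tuples per street segment.

    Returns {segment_id: {"review_count": total, "avg_stars": unweighted mean}}.
    """
    totals = defaultdict(lambda: {"review_count": 0, "star_sum": 0.0, "n": 0})
    for segment_id, review_count, stars in businesses:
        entry = totals[segment_id]
        entry["review_count"] += review_count
        entry["star_sum"] += stars
        entry["n"] += 1
    return {
        sid: {"review_count": e["review_count"], "avg_stars": e["star_sum"] / e["n"]}
        for sid, e in totals.items()
    }

# Hypothetical businesses already matched to segments.
businesses = [
    ("seg_a", 120, 4.0),
    ("seg_a", 30, 3.0),
    ("seg_b", 10, 5.0),
]

street_stats = aggregate_by_segment(businesses)
```

A review-weighted average would be a reasonable alternative if heavily reviewed businesses should count for more.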

-

6. Physical Environment Beauty Index

We use Google Street View images from a 2017 dataset to extract built environment values. This work uses a deep learning model created by Zhang et al. (2018). With the model and 6,708 street view images in Manhattan, we predict the beauty index of each image and then aggregate the beauty scores by street segment. The beauty score is normalized.
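The aggregation and normalization could be sketched as follows, assuming the per-image scores are averaged within each segment and then rescaled with min-max normalization to the range 0 to 1. The text does not say which normalization was used, so min-max is only an assumption here, and the scores are made up.

```python
def normalized_segment_beauty(image_scores):
    """Average image scores per segment, then min-max scale to [0, 1].

    image_scores maps a segment id to the list of predicted scores of its images.
    """
    means = {sid: sum(scores) / len(scores) for sid, scores in image_scores.items()}
    low, high = min(means.values()), max(means.values())
    span = high - low
    if span == 0.0:  # all segments share one score: avoid dividing by zero
        return {sid: 0.0 for sid in means}
    return {sid: (m - low) / span for sid, m in means.items()}

# Hypothetical predicted scores for the images on three segments.
image_scores = {
    "seg_a": [2.0, 4.0],
    "seg_b": [5.0],
    "seg_c": [1.0],
}

beauty_index = normalized_segment_beauty(image_scores)
```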

-

7. Limitations

We find a difference between online and offline activity during regular times. Does COVID-19 change anything? We hope to use activity from the past three months as a comparison.

-

8. Acknowledgement

SafeGraph is a data company that aggregates anonymized location data from numerous applications in order to provide insights about physical places. To enhance privacy, SafeGraph excludes census block group information if fewer than five devices visited an establishment in a month from a given census block group.